Why List Crawling is Essential for Modern Data Collection

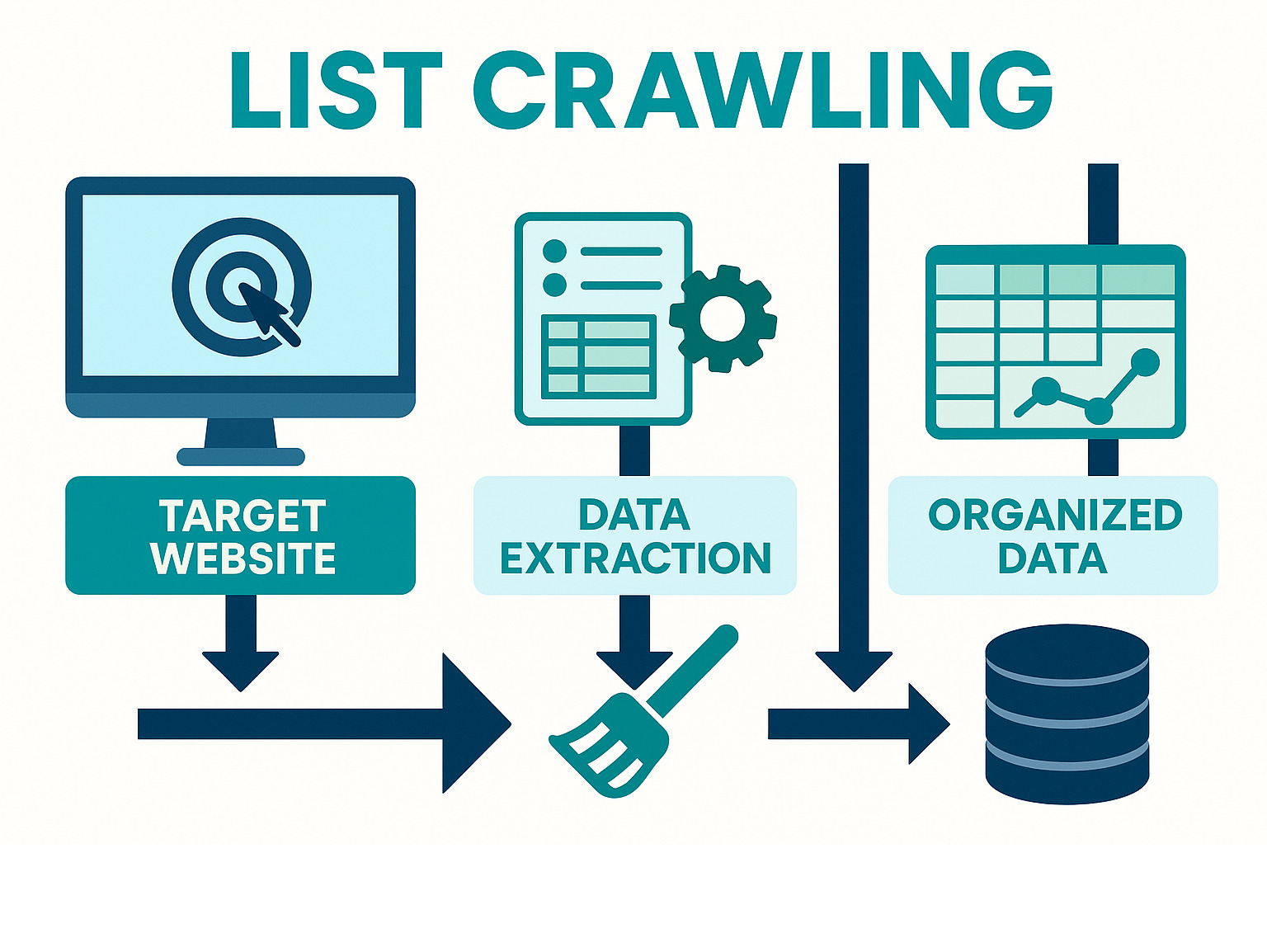

List crawling is a specialized form of web scraping that focuses on extracting collections of similar items from websites—think restaurant listings, menu prices, or review data from dining platforms. Unlike general web scraping that grabs random information, list crawling targets structured data that appears in lists, tables, or paginated results.

Quick Answer: What is List Crawling?

- Definition: Automated extraction of structured data from web lists and collections

- Key Difference: Focuses on repetitive data fields vs. general page content

- Common Targets: Business directories, product catalogs, review sites, search results

- Main Benefits: Saves time, reduces manual work, provides consistent data formats

- Popular Uses: Price monitoring, competitor research, lead generation, market analysis

For food and travel enthusiasts, list crawling is a game-changer. Instead of manually checking dozens of sites, you can automatically gather restaurant listings or menu prices in minutes.

“Businesses, researchers, and marketers use list crawlers to save time and get reliable insights and data.” This is especially valuable when tracking dining trends across NYC or building local food guides.

The process involves identifying target sites, configuring a crawler to navigate them, and extracting data points like restaurant names, ratings, and addresses. The result is a clean, organized dataset that saves weeks of manual work.

Whether you’re researching the best ramen in Manhattan or tracking food trucks in NYC, list crawling automates hours of research, collecting data while you sleep.

What is List Crawling and Why It Matters

List crawling is an essential web scraping tool for collecting structured data. It focuses on extracting collections of similar items, perfect for researching the best dim sum in Chinatown or tracking menu prices across different NYC neighborhoods.

A list crawler automatically navigates pages with repetitive data, like restaurant directories. It does the heavy lifting, gathering comprehensive data on New York’s dining scene in hours, not weeks.

This approach is efficient and cost-effective. For our local food guides, list crawling lets us systematically collect restaurant names, addresses, and ratings from multiple sources, ensuring we find every hidden gem.

The applications for foodies are endless: market research to analyze pricing trends, competitor analysis to see what other platforms feature, and lead generation to find new restaurant partners.

The Key Difference: General Scraping vs. Focused List Crawling

While general web scraping casts a wide net, list crawling is laser-focused on structured collections. It’s the difference between photographing a whole newspaper and carefully extracting just the restaurant reviews.

General scraping might pull unstructured text from a food blog post. List crawling, however, targets structured elements—neat rows of restaurant listings, organized tables of menu prices, or paginated venue profiles.

This focus is crucial for handling complex website features. Many dining platforms use infinite scroll to load more restaurants. A list crawler simulates this scrolling to capture every listing. Similarly, it handles pagination automatically, clicking through each page of a directory methodically.

This targeted approach is especially powerful for culinary data collection. Whether extracting menu prices or gathering restaurant reviews, list crawling ensures we get complete, structured datasets.

How List Crawling Powers Food and Travel Insights

The real excitement is turning raw web data into actionable food and travel intelligence. Living in New York City, we see how dining trends shift rapidly between neighborhoods.

Trend analysis becomes incredibly powerful when you can track data across hundreds of restaurants. Are plant-based options expanding into Queens? Is the average brunch price rising in Brooklyn? List crawling provides the data to answer these questions confidently.

Price monitoring is a game-changer for culinary travel planning. By crawling menu data, we can spot seasonal price changes and identify the best-value neighborhoods for food tourists.

When building local food guides, list crawling ensures our recommendations are comprehensive and current. We gather fresh data on hours, contact details, and recent reviews from multiple platforms.

Aggregating user-generated content from review platforms and forums adds authenticity, capturing genuine diner experiences and insider tips.

Perhaps most exciting is our ability to identify hidden gems by crawling smaller, local review sites before they hit the mainstream.

For sophisticated data collection, we rely on proven tools like Scrapy, which provides a robust framework to handle the scale of modern web data extraction. This ensures our list crawling operations deliver reliable insights for our food-loving community.

The Core Process: A 4-Step Method for Successful Data Extraction

When I started using list crawling for restaurant data in NYC, it seemed complicated. But breaking it down into four straightforward steps makes it manageable, like following a recipe.

This four-step method will guide you through extracting structured data from most websites. I’ve used this exact process to build dining guides for neighborhoods across Manhattan and Brooklyn, and it has held up well.

Step 1: Identify Targets and Define Data Points

First, define what data you need and where to find it. This planning step is crucial and saves hours of frustration. Identify your target websites, such as business directories, e-commerce sites, or review platforms. The key is finding publicly accessible data.

Next, you’ll need to peek under the hood using your browser’s developer tools (right-click and select “Inspect Element”). This reveals the website’s HTML code. You’re looking for patterns.

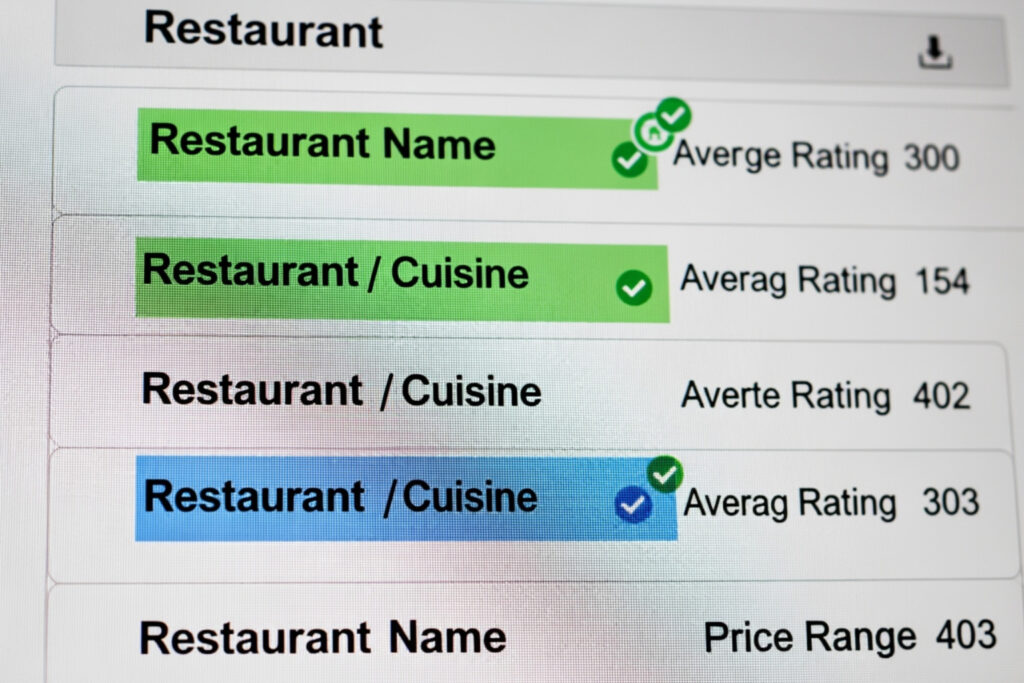

During HTML inspection, I look for CSS selectors and XPath expressions. These are like addresses that tell your crawler where to find information. A restaurant’s name might sit in an element matched by the CSS selector .restaurant-name, while its rating is reachable with the XPath expression //div[@class="rating"]/span/text().

For a typical restaurant listing, you’ll define data fields like name, address, phone number, cuisine, rating, and price range. Your crawler will collect these from every listing.
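
As a rough illustration of how selectors map to data fields, the sketch below applies XPath-style paths to a small, hand-written snippet using only Python’s standard library. The markup, class names, and the extract_listings helper are assumptions invented for this example. The standard library supports only a subset of XPath (no text() step, so findtext is used instead), and real pages are rarely well-formed XML, so a dedicated HTML parser would normally take its place.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed markup standing in for a directory page.
SAMPLE_HTML = """
<div>
  <div class="listing">
    <h2 class="restaurant-name">Noodle House</h2>
    <div class="rating"><span>4.5</span></div>
    <span class="address">12 Example St</span>
  </div>
  <div class="listing">
    <h2 class="restaurant-name">Taco Corner</h2>
    <div class="rating"><span>4.1</span></div>
    <span class="address">34 Sample Ave</span>
  </div>
</div>
"""


def extract_listings(html: str) -> list[dict]:
    """Return one dict per listing, with the same fields for every item."""
    root = ET.fromstring(html.strip())
    listings = []
    # Each repeated "listing" block is one record in the list.
    for item in root.findall(".//div[@class='listing']"):
        listings.append({
            "name": item.findtext("./h2[@class='restaurant-name']"),
            "rating": float(item.findtext("./div[@class='rating']/span")),
            "address": item.findtext("./span[@class='address']"),
        })
    return listings


listings = extract_listings(SAMPLE_HTML)
for row in listings:
    print(row)
```

The point of the sketch is the pattern: find the repeating container first, then read the same fields relative to each container.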

Step 2: Configure and Execute the Crawler

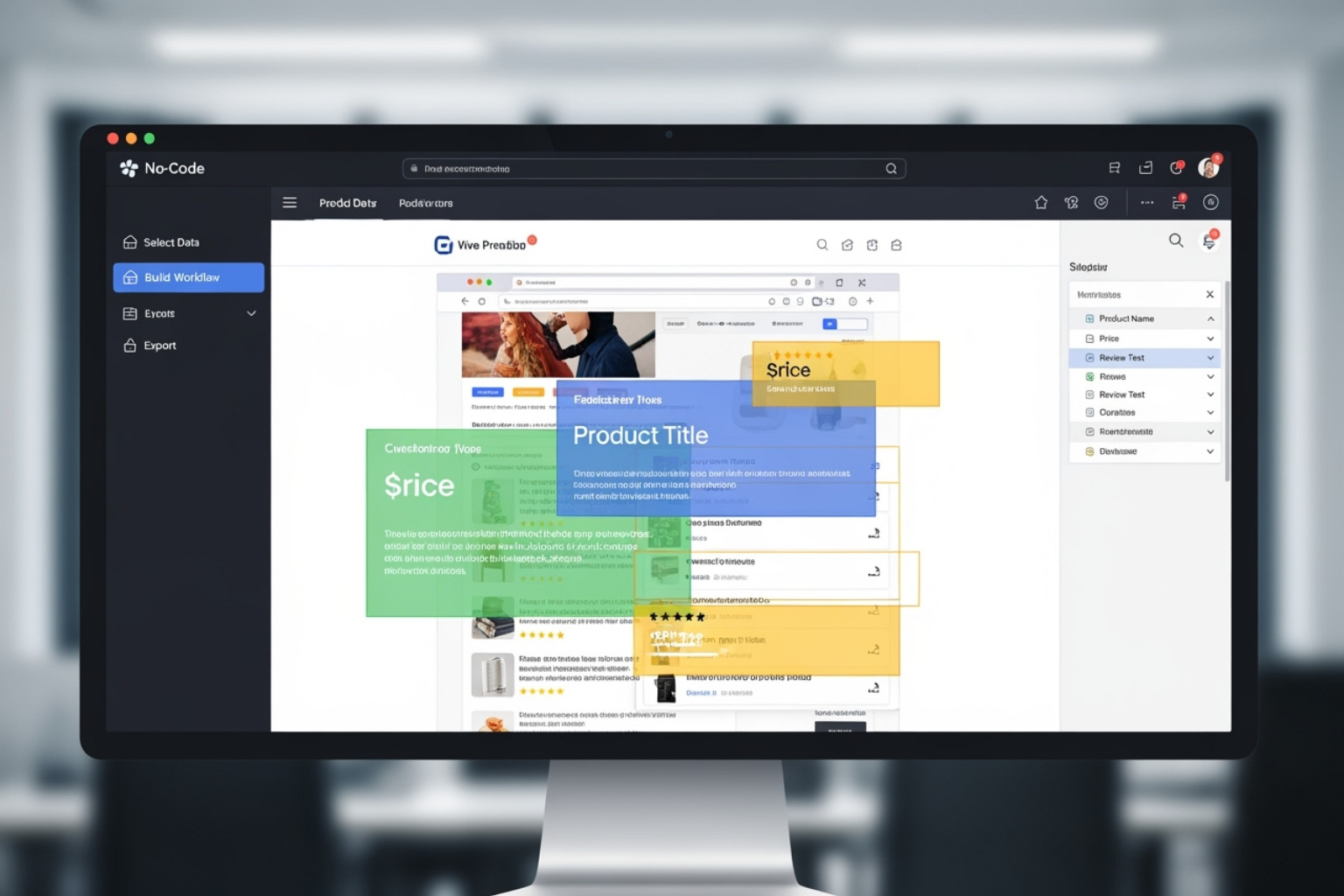

Now, set up your crawler. With modern list crawling tools, you don’t need to write complex code from scratch.

Crawler setup begins with choosing the right tool. No-code tools with point-and-click interfaces are user-friendly for beginners. More complex projects may require specialized tools that handle JavaScript-heavy websites.

Next, configure your crawling parameters. Specify the starting URLs, set up navigation rules for moving between pages, and define which data to extract using the selectors you identified.

Pagination handling is a key step. Your crawler must be smart enough to click “next page” buttons or handle infinite scroll, which often requires headless browsers that simulate human scrolling.

When running the crawl, always test on a few pages first. Once you’re confident it works correctly, you can scale up to capture thousands of listings.
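
The pagination logic described above can be sketched as a loop that follows “next” links until none remain. To keep the example runnable without network access, it uses a made-up in-memory site (FAKE_SITE) and a stand-in fetch_page function; both are assumptions for illustration, and a real crawler would fetch and parse actual pages at that point.

```python
# Hypothetical in-memory "site": each page has items and an optional next URL.
FAKE_SITE = {
    "/restaurants?page=1": {"items": ["Noodle House", "Taco Corner"], "next": "/restaurants?page=2"},
    "/restaurants?page=2": {"items": ["Bagel Stop"], "next": "/restaurants?page=3"},
    "/restaurants?page=3": {"items": ["Dumpling Bar"], "next": None},
}


def fetch_page(url: str) -> dict:
    """Stand-in for an HTTP request plus parsing; swap in a real fetcher."""
    return FAKE_SITE[url]


def crawl_list(start_url: str, max_pages: int = 50) -> list[str]:
    """Follow "next" links from start_url, collecting items from each page."""
    collected = []
    seen = set()  # guards against pagination loops
    url = start_url
    while url and url not in seen and len(seen) < max_pages:
        seen.add(url)
        page = fetch_page(url)
        collected.extend(page["items"])
        url = page["next"]
    return collected


# Test on a couple of pages first, then run the full crawl.
trial_items = crawl_list("/restaurants?page=1", max_pages=2)
print(trial_items)

all_items = crawl_list("/restaurants?page=1")
print(all_items)
```

The max_pages cap doubles as the “test on a few pages first” switch: run with a small value, check the output, then raise it.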

Common data points for restaurant extraction:

- Name and address details

- Rating and price range indicators

- Cuisine type and contact information

- Website URL and review links

- Operating hours and brief descriptions

Step 3: Extract, Clean, and Structure the Data

This step turns messy, raw data into something useful. Data extraction pulls raw information, but it’s rarely perfect. You’ll find duplicates, formatting issues, and irrelevant content.

Data cleaning is your best friend here. Removing duplicates ensures each restaurant appears once. Formatting correction standardizes phone numbers, addresses, and prices. Data validation catches obvious errors, like an impossible rating.

Once clean, store your data in a useful format. CSV files work well for simple datasets, while JSON is better for complex, nested information. For large projects, databases offer powerful management capabilities.
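
Here is a minimal sketch of the cleaning and storage steps, using only the standard library. The sample rows, the 0 to 5 rating scale, the 10-digit phone format, and the choice to drop invalid rows rather than flag them are all assumptions for illustration.

```python
import csv
import io
import json
import re

# Hypothetical raw rows as a crawler might emit them.
raw_rows = [
    {"name": "Noodle House ", "phone": "(212) 555-0101", "rating": "4.5"},
    {"name": "noodle house", "phone": "212.555.0101", "rating": "4.5"},  # duplicate
    {"name": "Taco Corner", "phone": "212 555 0102", "rating": "11"},   # impossible rating
    {"name": "Bagel Stop", "phone": "2125550103", "rating": "4.0"},
]


def normalize_phone(phone: str) -> str:
    """Keep digits only and format 10-digit numbers as XXX-XXX-XXXX."""
    digits = re.sub(r"\D", "", phone)
    if len(digits) == 10:
        return f"{digits[:3]}-{digits[3:6]}-{digits[6:]}"
    return phone.strip()


def clean(rows: list[dict]) -> list[dict]:
    cleaned, seen = [], set()
    for row in rows:
        name = row["name"].strip()
        phone = normalize_phone(row["phone"])
        rating = float(row["rating"])
        if not 0 <= rating <= 5:     # validation: assumes a 0-5 scale
            continue
        key = (name.lower(), phone)  # dedupe on normalized name + phone
        if key in seen:
            continue
        seen.add(key)
        cleaned.append({"name": name, "phone": phone, "rating": rating})
    return cleaned


cleaned_rows = clean(raw_rows)

# CSV suits flat data. Written to memory here; use open(path, "w", newline="") for a file.
csv_buffer = io.StringIO()
writer = csv.DictWriter(csv_buffer, fieldnames=["name", "phone", "rating"])
writer.writeheader()
writer.writerows(cleaned_rows)
print(csv_buffer.getvalue())

# JSON handles nested fields (for example, opening hours per day) more naturally.
print(json.dumps(cleaned_rows, indent=2))
```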

This cleaning process is crucial. A sophisticated crawler is useless if the final dataset is full of errors. For more guidance, check out our comprehensive resource guides.

Step 4: Analyze and Visualize Your Findings

This is where list crawling generates real insights. After collecting and cleaning, you can finally see what the numbers are telling you.

Data analysis reveals patterns impossible to spot manually. You might discover that top-rated Vietnamese restaurants cluster in specific Brooklyn neighborhoods, or calculate the average dinner price in Midtown Manhattan. These insights come from your structured dataset.
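
A grouped average is often the first analysis worth running on a cleaned dataset. The sketch below computes the average dinner price per neighborhood; the records, field names, and prices are invented for illustration and are not real data.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical cleaned records; every value here is made up.
restaurants = [
    {"name": "A", "neighborhood": "Midtown", "dinner_price": 42.0},
    {"name": "B", "neighborhood": "Midtown", "dinner_price": 38.0},
    {"name": "C", "neighborhood": "Williamsburg", "dinner_price": 30.0},
    {"name": "D", "neighborhood": "Williamsburg", "dinner_price": 34.0},
]


def average_by(rows: list[dict], group_field: str, value_field: str) -> dict:
    """Average value_field within each distinct value of group_field."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[group_field]].append(row[value_field])
    return {group: round(mean(values), 2) for group, values in groups.items()}


avg_price = average_by(restaurants, "neighborhood", "dinner_price")
print(avg_price)
```

The same helper works for any pair of fields, such as average rating by cuisine.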

Creating reports and visualizations brings findings to life. Charts, maps, and graphs make complex data immediately understandable.

Dashboards offer real-time monitoring of new restaurant openings, review score changes, or seasonal menu trends across the city.

The goal is actionable intelligence. For us, this means identifying dining trends, mapping culinary hotspots, and providing data-driven recommendations that help our community find amazing dining experiences.

Overcoming Common Problems in List Crawling

List crawling is powerful but has its challenges. Websites use dynamic content and anti-scraping measures. Here are methods for working through them.

Method: Handling Dynamic Content and Anti-Scraping Measures

Modern websites often load content dynamically with JavaScript or AJAX, which can challenge traditional crawlers. Many sites also actively block bots.

- JavaScript Rendering: Use tools that execute JavaScript, like a real browser. Selenium and Puppeteer are excellent for this, allowing page content to fully render before extraction. This is crucial for “infinite scroll” pages.

- Handling CAPTCHAs: These “prove you’re not a robot” challenges can stop a crawler. While some services can solve them, the best defense is to crawl respectfully to avoid triggering them.

- IP Rotation: Websites may block a single IP address making too many requests. Using proxy servers rotates your IP, making requests appear to come from different users. This helps evade bans and mimics natural browsing.

- User-Agent Rotation: Websites can also block specific user-agents (which identify your browser). Rotating these makes your crawler appear as if it’s coming from various browsers and devices, reducing detection risk.

Method: Optimizing Crawler Performance and Scale

Efficiency is key for large-scale data collection. Optimizing performance ensures you get data quickly without overburdening the target website.

- Limiting Request Frequency: Sending requests too quickly can get you blocked. We configure our crawlers with a DOWNLOAD_DELAY (e.g., 1-3 seconds between requests) to mimic human browsing.

- Parallel Crawling: Instead of crawling one page at a time, run multiple requests concurrently. Frameworks like Scrapy handle this asynchronously. Settings like CONCURRENT_REQUESTS_PER_DOMAIN manage the load on a single site.

- Error Handling: Robust crawlers include retry mechanisms for failed requests, exponential backoff to avoid overwhelming a server, and detailed logging to diagnose and fix issues.

- Respecting robots.txt: This file (www.example.com/robots.txt) provides rules for web robots. Ethical crawlers always check and obey these rules. We ensure our crawlers respect these directives by enabling settings like ROBOTSTXT_OBEY.

- Responsible Crawling at Scale: For large sites, responsible crawling is paramount. We adjust settings like USER_AGENT, DOWNLOAD_DELAY, and AUTOTHROTTLE_ENABLED for dynamic request throttling. For more details, consider resources like A Guide To Web Crawling With Python.
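
For readers using Scrapy, the settings named above live in a project’s settings.py. The fragment below is a sketch with illustrative values, not our production configuration; the user-agent string and URL are placeholders, and the right numbers depend on the target site.

```python
# settings.py (Scrapy project): illustrative values, not recommendations.

# Identify the crawler honestly; the name and URL here are placeholders.
USER_AGENT = "example-list-crawler (+https://www.example.com/bot-info)"

ROBOTSTXT_OBEY = True                # skip URLs disallowed by robots.txt
DOWNLOAD_DELAY = 2                   # base delay in seconds between requests to the same site
CONCURRENT_REQUESTS_PER_DOMAIN = 4   # cap on parallel requests per domain

AUTOTHROTTLE_ENABLED = True          # adapt the delay to observed server latency
AUTOTHROTTLE_START_DELAY = 2         # initial delay in seconds
AUTOTHROTTLE_MAX_DELAY = 30          # upper bound when the server is slow

RETRY_ENABLED = True
RETRY_TIMES = 3                      # retries per failed request
LOG_LEVEL = "INFO"                   # keep enough logging to diagnose failures
```

With AutoThrottle enabled, Scrapy adjusts the delay dynamically but does not go below DOWNLOAD_DELAY, so the two settings work together.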

Method: Navigating Legal and Ethical Waters

This is the most important aspect of list crawling. Collecting data responsibly and legally is non-negotiable.

- Terms of Service (ToS): Always review a site’s ToS. Some explicitly prohibit automated data collection. Ignoring them can lead to legal issues.

- Data Privacy Laws: Comply with regulations like GDPR and CCPA. This means avoiding sensitive personally identifiable information (PII) and practicing data minimization by only collecting what you truly need.

- Server Load: A poorly configured crawler can overwhelm a website’s server. Limit your request speed to avoid impacting the site’s performance for other users.

- Public vs. Private Data: Distinguish between publicly available data (like restaurant hours) and private data behind a login. Our focus is always on public, non-sensitive information.
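
Alongside the ToS, a site’s robots.txt states which paths automated clients may visit. As a small sketch, Python’s standard urllib.robotparser can check a URL against those rules before any request is made. The robots.txt content, the agent name, and the is_allowed helper below are hypothetical; in practice the file would be fetched from the site being crawled.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; normally fetched from the site itself.
ROBOTS_TXT = """\
User-agent: *
Disallow: /account/
Crawl-delay: 5
"""

AGENT = "example-list-crawler"  # placeholder crawler name

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_allowed(url: str) -> bool:
    """True if robots.txt permits AGENT to fetch this URL."""
    return parser.can_fetch(AGENT, url)


public_ok = is_allowed("https://www.example.com/restaurants?page=1")
private_ok = is_allowed("https://www.example.com/account/settings")
delay = parser.crawl_delay(AGENT)

print(public_ok, private_ok, delay)
```

A check like this covers only robots.txt. It does not replace reading the ToS or applying privacy rules to the data itself.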

Frequently Asked Questions about List Crawling

Here are answers to common questions about list crawling to help you get started on your data collection journey.

What are the best beginner-friendly tools for list crawling?

Beginner-friendly list crawling tools don’t require coding expertise to extract valuable restaurant or travel data.

No-code tools are game-changers for newcomers. Visual scrapers like Octoparse and ParseHub have intuitive interfaces. You can click on a restaurant name, rating, or price, and the tool learns to extract that same information from hundreds of similar listings.

Browser extensions are another gentle entry point. They are perfect for quick data grabs while researching dining spots and often export directly to a spreadsheet.

For those comfortable with some code, Python libraries offer incredible flexibility. The learning curve is manageable, especially when motivated by automating data gathering on the best ramen shops in different neighborhoods.

How does list crawling contribute to Search Engine Optimization (SEO)?

List crawling is incredibly valuable for SEO, especially in the competitive food and travel space. It helps you understand the digital landscape and create better content.

- Competitor monitoring: Automatically track what topics competitors are covering and which restaurants they feature to identify gaps in your own content strategy.

- Keyword research: Crawl Search Engine Results Pages (SERPs) to gain deep insights into search intent.

- Building data-driven content: Create definitive guides, like a list of NYC’s highest-rated restaurants, by gathering data from multiple sources. This comprehensive content naturally attracts links and shares.

- Broken link detection: A crawler can scan your site (or a competitor’s) to find dead links, helping you maintain a good user experience that search engines reward.

As research confirms, “List crawling is also very useful in SEO because it detects broken links and checks competitors.” It’s like having a research assistant constantly gathering intelligence to improve your search visibility.

How should I handle websites that block my crawler?

Getting blocked is a common part of list crawling. Websites are simply protecting themselves. There are several respectful ways to work around these obstacles.

- Slow down: The simplest solution is to slow your request rate. A DOWNLOAD_DELAY of 5-10 seconds between requests makes your activity seem more human.

- Rotate user-agents: Make your crawler appear as different browsers (Chrome, Firefox, etc.) to avoid creating a pattern that triggers blocks.

- Use proxies: This is a very effective method. By rotating your IP address, each request appears to come from a different location, preventing any single IP from being flagged.

- Respect robots.txt: Always check a website’s robots.txt file. If it says certain areas are off-limits, respect those boundaries. It’s an essential ethical and legal practice.

- Use headless browsers: Tools like Playwright can be programmed to mimic human behavior, such as natural scrolling and mouse movements, making your crawler more convincing.

The goal is to collect data responsibly while respecting the resources and rules of the sites you visit.
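
The “slow down” advice pairs naturally with exponential backoff: after each failed request, wait longer before trying again. The sketch below is one possible shape for that logic. The function names, the 5-second base delay, and the flaky_fetch stand-in are assumptions for illustration, and the demo replaces the real sleep with a no-op so it runs instantly.

```python
import random
import time


def backoff_delays(base: float = 5.0, factor: float = 2.0,
                   retries: int = 4, cap: float = 120.0) -> list[float]:
    """Delays in seconds for successive retries: base, base*factor, ..., capped."""
    return [min(cap, base * factor ** attempt) for attempt in range(retries)]


def fetch_with_backoff(fetch, url, delays, sleep=time.sleep):
    """Call fetch(url); on failure wait the next delay (plus jitter) and retry."""
    last_error = None
    for delay in [0.0] + list(delays):
        if delay:
            sleep(delay + random.uniform(0, 1))  # jitter avoids a fixed rhythm
        try:
            return fetch(url)
        except Exception as error:  # broad on purpose for this sketch
            last_error = error
    raise last_error


# Hypothetical fetcher that fails twice before succeeding.
attempts = {"count": 0}


def flaky_fetch(url: str) -> str:
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("temporarily blocked")
    return f"content of {url}"


delays = backoff_delays()
print(delays)

result = fetch_with_backoff(
    flaky_fetch, "https://www.example.com/restaurants", delays,
    sleep=lambda seconds: None,  # skip real waiting in the demo
)
print(result)
```

In a real crawl, leave the default sleep in place so the waits actually happen, and stop retrying if the site keeps refusing requests.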

Conclusion

List crawling has transformed from a technical tool into an essential method for understanding the digital food landscape. At The Dining Destination, we’ve seen how it helps us find the best culinary experiences in New York City and beyond.

Instead of spending hours on manual research, list crawling lets you gather comprehensive data automatically. It’s like having a tireless research assistant who works around the clock.

We’ve covered the key differences between general scraping and focused list crawling, walked through the four-step process for successful data extraction, and tackled common challenges like dynamic content and anti-scraping measures, all while emphasizing the importance of ethical data collection.

The future of list crawling is bright. AI and Machine Learning are making data extraction smarter and more intuitive. This means easier data extraction for everyone, from seasoned analysts to beginners starting their first culinary research project.

For us, this evolution means delivering richer insights to our community. We’re focused on building unique culinary guides that dive deep into pricing trends, emerging food scenes, and the hidden neighborhood gems that make dining adventures memorable.

Whether you’re tracking ramen trends in Manhattan or comparing brunch prices across Brooklyn, list crawling gives you the power to turn scattered web information into actionable insights. It’s about understanding the stories that data tells and sharing those findings with fellow food lovers.

Ready to dive deeper into data-driven food discovery? Explore our comprehensive resource guides for more data-driven travel tips and start your own journey into the fascinating world of culinary data.